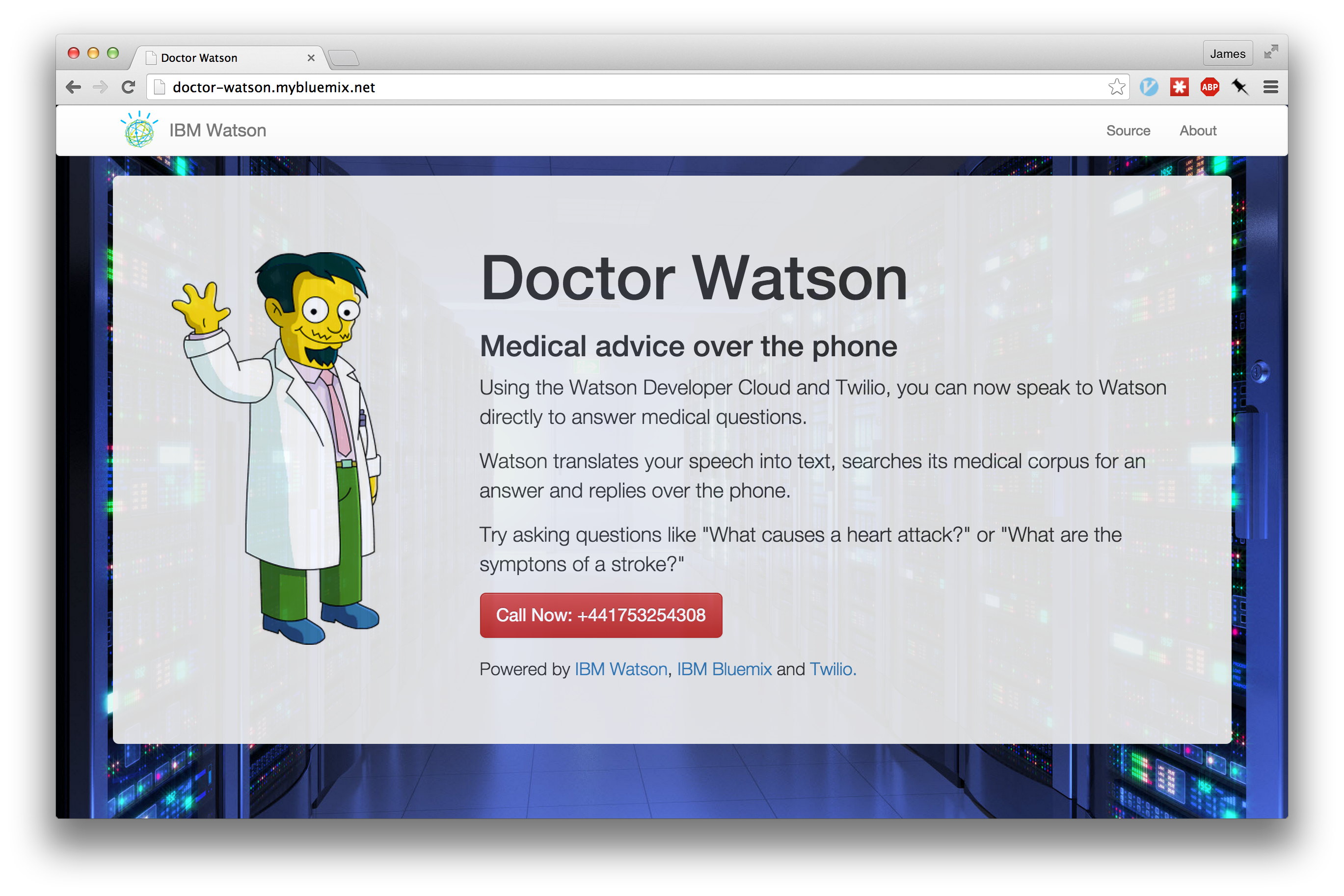

Doctor Watson is an IBM Bluemix application to answer medical questions over the phone, using IBM Watson and Twilio.

When you ring an external phone number, the application answers and asks for a medical question to help with. After translating your speech into text, Doctor Watson queries the medical corpus using IBM Watson’s Question and Answer service.

Top rated answers will be converted to speech and used as a response over the phone. Users can continue to ask more questions for further information.

Putting together this complex application, with voice recognition, telephony handling and a medical knowledge base, was incredibly simple using the IBM Bluemix platform.

Source code for the application: https://github.com/jthomas/doctor-watson

Fork and deploy the repository to have your own version of Doctor Watson!

Want to know how the project was built? Read on…

Overview

Doctor Watson is a NodeJS application using Express, a framework for building web applications, to handle serving static content and creating REST APIs.

The front page gives an overview of the application, served from static HTML files with templating to inject the phone number at runtime.

HTTP endpoints handle the incoming messages from Twilio as users make new phone calls. The application’s public URL will be bound to a phone number using Twilio’s account administration.

Services for IBM Watson are exposed to the application through the IBM Bluemix platform. The Speech to Text and Question and Answer services are used during phone call handling.

The application can be deployed on IBM Bluemix, IBM’s public hosted Cloud Foundry platform.

Handling phone calls

Twilio provides “telephony-as-a-service”, making applications able to respond to telephone calls and text messages using a REST API.

Twilio has been made available on the IBM Bluemix platform. Binding this service to your application will provide the authentication credentials to use with the Twilio client library.

Users register external phone numbers through the service, which are bound to external web addresses controlled by the user. When a person dials that number, the service makes an HTTP call with the details to the application. The HTTP response dictates how to handle the phone call, allowing you to record audio from the user, redirect to another number, play an audio file and much more.
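
To make that round trip concrete, here is a minimal sketch that uses no Twilio code at all. It assumes the call details arrive as form-encoded parameters (such as `CallSid` and `From`); the `parseCallDetails` and `handleIncomingCall` helpers, the sample phone numbers and the greeting are all hypothetical and exist only for illustration.

```javascript
// Illustrative sketch of one webhook round trip, using no Twilio code.

// Call details are assumed to arrive as form-encoded parameters,
// for example CallSid and From.
function parseCallDetails(rawBody) {
  var details = {};
  new URLSearchParams(rawBody).forEach(function (value, key) {
    details[key] = value;
  });
  return details;
}

// Hypothetical handler: the XML returned here tells the telephony
// service what to do next with the live call.
function handleIncomingCall(rawBody) {
  var call = parseCallDetails(rawBody);
  return {
    callSid: call.CallSid,
    contentType: 'text/xml',
    body: '<?xml version="1.0" encoding="UTF-8"?>' +
          '<Response><Say>Thanks for calling.</Say></Response>'
  };
}

// Made-up request body for demonstration purposes.
var reply = handleIncomingCall('CallSid=CA123&From=%2B15550100&To=%2B15550199');
console.log(reply.callSid, reply.body);
```

In the real application, Express and the Twilio library take care of the parsing and XML generation, so none of this needs writing by hand.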

We’re using ExpressJS to expose the HTTP endpoints used to handle incoming Twilio messages. Twilio’s client libraries abstract the low-level network requests behind a JavaScript interface.

This code snippet shows the outline for message processing.

    app.post('/some/path', twilio.webhook(twilio_auth_token), function(req, res) {
      // req.body -> request parameters parsed to a JS object
      // do some processing
      var twiml = new twilio.TwimlResponse();
      twiml.command(...); // where command is a 'verb' from TwiML
      res.send(twiml);
    });

TwiML is the Twilio Markup Language, an XML message with instructions you can use to tell Twilio how to handle incoming phone calls and SMS messages.

TwimlResponse instances generate the XML message responses. Primary verbs from the TwiML specification are available as chainable function calls on the class instance.
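
To show roughly what those XML responses look like, here is a small hand-rolled sketch that builds a TwiML document without the Twilio library. The `twimlResponse` helper and `escapeXml` function are hypothetical stand-ins written for this example; only the `<Response>`, `<Say>` and `<Record>` elements and the `timeout` and `action` attributes come from TwiML itself.

```javascript
// Illustrative only: a tiny hand-rolled TwiML builder (not the Twilio library).

// Escape characters that are special in XML text and attribute values.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical helper mimicking the chainable style of TwimlResponse.
function twimlResponse() {
  var verbs = [];
  return {
    say: function (message) {
      verbs.push('<Say>' + escapeXml(message) + '</Say>');
      return this; // returning the builder allows chaining
    },
    record: function (options) {
      verbs.push('<Record timeout="' + escapeXml(options.timeout) +
                 '" action="' + escapeXml(options.action) + '"/>');
      return this;
    },
    toString: function () {
      return '<?xml version="1.0" encoding="UTF-8"?>' +
             '<Response>' + verbs.join('') + '</Response>';
    }
  };
}

var xml = twimlResponse()
  .say('How can I help you?')
  .record({ timeout: 60, action: '/calls/recording' })
  .toString();

console.log(xml);
```

Each chained call appends one verb, and the verbs are carried out in the order they appear inside the `<Response>` element.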

Doctor Watson Call Flow

When the user first calls the phone number, Twilio sends an HTTP POST request with the call details to http://doctor-watson.mybluemix.net/calls.

    router.post('/', twilio.webhook(twilio_auth_token), function(req, res) {
      log.info(req.body.CallSid + "-> calls/");
      log.debug(req.body);
      var twiml = new twilio.TwimlResponse();
      twiml.say('Hello this is Doctor Watson, how can I help you? Press any key after you have finished speaking')
        .record({timeout: 60, action: "/calls/recording"});
      res.send(twiml);
    });

We’re using the TwiML response to tell the user how to ask a question. Their response is recorded, and the recording with their question is sent in another request to the ‘/calls/recording’ location.

    router.post('/recording', twilio.webhook(twilio_auth_token), function(req, res) {
      var twiml = new twilio.TwimlResponse();
      enqueue_question(req.body);
      twiml.say("Let me think about that.").redirect("/calls/holding");
      res.send(twiml);
    });

Using the audio recording of the user’s question, referenced in the request body, we now schedule a call to the Watson services.
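
The internals of `enqueue_question` aren’t shown here, so the following is only a rough sketch of how such a pipeline might be wired together. Every step function (`download`, `upsample`, `transcribe`, `ask`, `notify`) is a hypothetical placeholder passed in by the caller, and the sketch assumes the request body carries `CallSid` and `RecordingUrl` parameters.

```javascript
// Illustrative sketch of a question pipeline; every step function is a
// hypothetical placeholder, not the application's actual code.
function processQuestion(body, steps) {
  // Assumed request parameters: the call identifier and recording location.
  var callSid = body.CallSid;
  return Promise.resolve(body.RecordingUrl)
    .then(steps.download)    // fetch the recording
    .then(steps.upsample)    // convert the audio to 16 kHz
    .then(steps.transcribe)  // audio -> question text
    .then(steps.ask)         // question text -> answer text
    .then(function (answer) {
      // Hand the answer back so the live call can be redirected.
      return steps.notify(callSid, answer);
    });
}

// Stubbed steps so the flow can run without any external services.
var stubs = {
  download: function (url) { return 'recording.wav'; },
  upsample: function (file) { return 'recording-16k.wav'; },
  transcribe: function (file) { return 'what is diabetes'; },
  ask: function (question) { return 'answer to: ' + question; },
  notify: function (callSid, answer) {
    return { callSid: callSid, answer: answer };
  }
};

var pending = processQuestion(
  { CallSid: 'CA123', RecordingUrl: 'http://example.com/recording' },
  stubs
);
pending.then(function (result) { console.log(result); });
```

Because the work runs asynchronously, the HTTP handler can respond to Twilio straight away while the question is still being processed.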

With the question answering request in-progress, we redirect the user into a holding loop.

    router.post('/holding', twilio.webhook(twilio_auth_token), function(req, res) {
      var twiml = new twilio.TwimlResponse();
      twiml.pause({length: 5})
        .say("I'm still thinking")
        .redirect("/calls/holding");
      res.send(twiml);
    });

Every five seconds, we relay a message over the phone call until the answer has been returned.

Within the callback for the Question and Answer service, we have the following code.

    var forward_to = cfenv.getAppEnv().url + "/calls/answer";
    client.calls(call_ssid).update({url: forward_to});

This uses the Twilio client to update the location for a live call, redirecting to the location which returns the answer.

    router.post('/answer', twilio.webhook(twilio_auth_token), function(req, res) {
      var twiml = new twilio.TwimlResponse();
      twiml.say(answers[req.body.CallSid])
        .say("Do you have another question?")
        .record({timeout: 60, action: "/calls/recording"});
      res.send(twiml);
    });

Now we’ve shown how to handle phone calls, let’s dig into the Watson services…

Using the Watson Services

IBM Bluemix continues to roll out more services to the Watson catalogue. There are now fifteen services to help you create cognitive applications.

Doctor Watson uses Speech to Text and Question and Answer.

All services come with great documentation to help get you started, with sample code, API definitions and client libraries for different languages.

We’re using the NodeJS client library in Doctor Watson.

Converting audio recording to text

When we have a new audio recording containing the user question, this needs converting into text to query the Watson Q&A service.

Twilio makes the recording available at an external URL as a WAV file with an 8 kHz sample rate. Watson’s Speech to Text service requires a minimum sample rate of 16 kHz for audio input.

Before sending the file to the service, we need to up-sample the audio.
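
As a quick sanity check before converting, the sample rate can be read straight from the WAV header. The sketch below assumes a canonical header layout, where the `fmt ` chunk directly follows the RIFF header; that holds for simple PCM files but is not guaranteed for every WAV file. The `wavSampleRate` and `needsUpsampling` helpers are hypothetical names, and the header is built by hand purely for demonstration.

```javascript
// Illustrative sketch: read the sample rate from a canonical WAV header.
// Assumes the 'fmt ' chunk directly follows the RIFF header.
function wavSampleRate(buffer) {
  if (buffer.length < 28 ||
      buffer.toString('ascii', 0, 4) !== 'RIFF' ||
      buffer.toString('ascii', 8, 12) !== 'WAVE' ||
      buffer.toString('ascii', 12, 16) !== 'fmt ') {
    throw new Error('Not a canonical WAV header');
  }
  // The sample rate is a 32-bit little-endian integer at byte offset 24.
  return buffer.readUInt32LE(24);
}

// Hypothetical helper: does this recording need up-sampling first?
function needsUpsampling(buffer, minimumRate) {
  return wavSampleRate(buffer) < minimumRate;
}

// Build a fake 44-byte header for an 8 kHz mono file to try it out.
var header = Buffer.alloc(44);
header.write('RIFF', 0, 'ascii');
header.write('WAVE', 8, 'ascii');
header.write('fmt ', 12, 'ascii');
header.writeUInt32LE(16, 16);    // fmt chunk size
header.writeUInt16LE(1, 20);     // audio format: PCM
header.writeUInt16LE(1, 22);     // channel count: mono
header.writeUInt32LE(8000, 24);  // sample rate

console.log(wavSampleRate(header), needsUpsampling(header, 16000));
```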

Searching for a Node package that might help uncovered this library. Using the SOX audio processing library, we can easily convert audio files between sample rates.

    var job = sox.transcode(input, output, {
      sampleRate: 16000,
      format: 'wav',
      channelCount: 1
    });

    job.on('error', function(err) {
      log.error(err);
    });

    job.on('progress', function(amountDone, amountTotal) {
      log.debug("progress", amountDone, amountTotal);
    });

    job.on('end', function() {
      log.debug("Transcoding finished.");
    });

    job.start();

This package relies on the SOX C library, which isn’t installed on the default host environment in IBM Bluemix. Overcoming this hurdle meant creating a custom NodeJS buildpack which installed the pre-built binaries into the application runtime. I’m saving the details of this for another blog post…

Using the Watson client library, we can send this audio to the external service for converting to text.

    var speech_to_text = watson.speech_to_text({
      username: USERNAME,
      password: PASSWORD,
      version: 'v1'
    });

    var params = {
      audio: fs.createReadStream(audio),
      content_type: 'audio/l16; rate=16000'
    };

    speech_to_text.recognize(params, function(err, res) {
      if (err) return;
      var question = res.results[res.result_index];
    });

… which we can now use to query the healthcare corpus for the Watson Q&A service.
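
The result picked out above wraps the recognised text in a list of alternatives, so a little unpacking is needed before it can be used as a question. The sketch below assumes the first alternative is the preferred one; `bestTranscript` is a hypothetical helper and the sample response is made up for illustration.

```javascript
// Illustrative sketch: pull a transcript out of a Speech to Text style
// response. The sample object below is made up for demonstration.
function bestTranscript(response) {
  var result = response.results && response.results[response.result_index];
  if (!result || !result.alternatives || result.alternatives.length === 0) {
    return null; // nothing was recognised
  }
  // Assumption: the first alternative is the preferred transcript.
  return result.alternatives[0].transcript.trim();
}

var sample = {
  result_index: 0,
  results: [{
    final: true,
    alternatives: [{ transcript: 'what is diabetes ', confidence: 0.9 }]
  }]
};

console.log(bestTranscript(sample));
```

Returning `null` when nothing was recognised gives the caller a chance to ask the user to repeat the question instead of querying with empty text.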

Getting answers

When asking questions, we need to set the correct corpus to query for answers.

    var question_and_answer_healthcare = watson.question_and_answer({
      username: USERNAME,
      password: PASSWORD,
      version: 'v1',
      dataset: 'healthcare'
    });

    exports.ask = function (question, cb) {
      question_and_answer_healthcare.ask({ text: question}, function (err, response) {
        if (err) return;
        var first_answer = response[0].question.evidencelist[0].text;
        cb(first_answer);
      });
    };

This triggers the callback we’ve registered with the first answer in the returned results. Answers are stored as values in a map, keyed by the call SID, before triggering the redirect to the ‘/calls/answer’ location shown above.
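
A minimal sketch of such a map might look like the following. The `saveAnswer` and `takeAnswer` helpers are hypothetical, and removing the answer once it has been read is an addition made for this example rather than something the application is known to do.

```javascript
// Illustrative sketch of an in-memory answer store keyed by call SID.
// A prototype-free object avoids clashes with built-in property names.
var answers = Object.create(null);

// Remember the answer for a call until the '/calls/answer' request arrives.
function saveAnswer(callSid, text) {
  answers[callSid] = text;
}

// Hypothetical addition: read the answer once, then drop it so the map
// does not keep growing as more calls come in.
function takeAnswer(callSid) {
  var text = answers[callSid];
  delete answers[callSid];
  return text;
}

saveAnswer('CA123', 'An example answer.');
console.log(takeAnswer('CA123'));
```

An in-memory map like this only works while a single application instance handles the whole call; running several instances would need shared storage.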

Deploying to Bluemix

Hosting the application on IBM Bluemix uses the following manifest file to configure the application at runtime.

We’re binding the services to the application and specifying the custom buildpack to expose the SOX library at runtime.

Following this…

$ cf push // and Doctor Watson is live!

Check out the source code here for further details. You can even run your own version of the application by following the instructions in the README.md.