Recently I presented my work on building “pluggable event providers” for Apache OpenWhisk to the open-source community during the bi-weekly video meeting.

This was based on my experience building a new event provider for Apache OpenWhisk, which led me to prototype an easier way to add event sources to the platform whilst cutting down on the boilerplate code required.

Slides from the talk are here and there’s also a video recording available.

This blog post is an overview of what I talked about on the call, explaining the background for the project and what was built. Based on positive feedback from the community, I have now open-sourced both components of the experiment and will be merging them back upstream into Apache OpenWhisk in the future.

pluggable event providers - why?

At the end of last year, I was asked to prototype an S3-compatible Object Store event source for Apache OpenWhisk. Reviewing the existing event providers helped me understand how they work and what was needed to build a new event source.

This led me to an interesting question…

Why do we have relatively few community contributions for event sources?

Most of the existing event sources in the project were contributed by IBM. There hasn’t been a new event source from an external community member. This is in stark contrast to additional platform runtimes. Support for PHP, Ruby, DotNet, Go and many more languages all came from community contributions.

Digging into the source code for the existing feed providers, I came to the following conclusions:

- Trigger feed providers are not simple to implement.

- Documentation on how existing providers work is lacking.

Feed providers can feel a bit like magic to users. You call the wsk CLI with a feed parameter and that’s it, the platform handles everything else. But what actually happens to bind triggers to external event sources?

Let’s start by explaining how trigger feeds are implemented in Apache OpenWhisk, before moving onto my idea to make contributing new feed providers easier.

how trigger feeds work

Users normally interact with trigger feeds using the wsk CLI. Whilst creating a trigger, the feed parameter can be included to connect that trigger to an external event source. Feed provider options are provided as further CLI parameters.

wsk trigger create periodic \
  --feed /whisk.system/alarms/alarm \
  --param cron "*/2 * * * *" \
  --param trigger_payload "{…}" \
  --param startDate "2019-01-01T00:00:00.000Z" \
  --param stopDate "2019-01-31T23:59:00.000Z"

But what are those trigger feed identifiers used with the feed parameter?

It turns out they are just normal actions which have been shared in a public package!

The CLI creates the trigger (using the platform API) and then invokes the referenced feed action. Invocation parameters include the following values used to manage the trigger feed lifecycle.

- lifecycleEvent: Feed operation (CREATE, READ, UPDATE, DELETE, PAUSE, or UNPAUSE).

- triggerName: Trigger identifier.

- authKey: API key provided to invoke the trigger.

Custom feed parameters from the user are also included in the event parameters.

This is the entire interaction of the platform with the feed provider.
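To make that contract concrete, here is a minimal sketch of how a feed action might separate the reserved lifecycle values from the user's custom feed parameters before dispatching on the event. The helper `parseFeedParams` and the returned shapes are illustrative assumptions, not code from any existing provider; a real action would persist or look up the registration rather than echo it back.

```javascript
// Lifecycle events a feed action can receive from the CLI.
const LIFECYCLE_EVENTS = ['CREATE', 'READ', 'UPDATE', 'DELETE', 'PAUSE', 'UNPAUSE'];

// Hypothetical helper: split the platform-supplied values from the
// user's custom feed parameters (e.g. cron, bucket, interval).
function parseFeedParams(params) {
  const { lifecycleEvent, triggerName, authKey, ...feedParams } = params;
  if (!LIFECYCLE_EVENTS.includes(lifecycleEvent)) {
    throw new Error(`unsupported lifecycleEvent: ${lifecycleEvent}`);
  }
  if (!triggerName || !authKey) {
    throw new Error('triggerName and authKey are required');
  }
  return { lifecycleEvent, triggerName, authKey, feedParams };
}

// Sketch of a feed action entry point dispatching on the lifecycle event.
function main(params) {
  const { lifecycleEvent, triggerName, feedParams } = parseFeedParams(params);
  switch (lifecycleEvent) {
    case 'CREATE':
    case 'UPDATE':
      // A real provider would store the registration here; this sketch
      // only echoes the parsed values.
      return { triggerName, lifecycleEvent, feedParams };
    default:
      // READ, DELETE, PAUSE and UNPAUSE act on an existing registration.
      return { triggerName, lifecycleEvent };
  }
}
```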

Providers are responsible for the full management lifecycle of trigger feed event sources. They have to maintain the list of registered triggers and auth keys, manage connections to user-provided event sources, fire triggers upon external events, handle retries and back-offs in cases of rate-limiting and much more.
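Firing a trigger is itself just a call to the platform API. The sketch below builds that request for the OpenWhisk REST endpoint `POST /api/v1/namespaces/{namespace}/triggers/{name}`, using basic auth derived from the stored `authKey`. It assumes `triggerName` arrives fully qualified as `/namespace/name` and that `apiHost` is the platform's API hostname; the function name is hypothetical, and retries and back-off are deliberately left out.

```javascript
// Hypothetical helper: build the HTTP request that fires a trigger.
// Assumes triggerName is "/namespace/name" and authKey is "uuid:key".
function buildFireRequest(apiHost, triggerName, authKey, payload) {
  const parts = triggerName.split('/').filter(Boolean);
  if (parts.length !== 2) {
    throw new Error(`expected /namespace/name, got ${triggerName}`);
  }
  const [namespace, name] = parts.map(encodeURIComponent);
  return {
    url: `https://${apiHost}/api/v1/namespaces/${namespace}/triggers/${name}`,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        // The API key is used directly as basic auth credentials.
        Authorization: 'Basic ' + Buffer.from(authKey).toString('base64'),
      },
      // The event payload becomes the trigger's parameters.
      body: JSON.stringify(payload),
    },
  };
}
```

On Node.js 18+ the result could be passed to the built-in `fetch` as `fetch(req.url, req.options)`.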

The feed action used with a trigger is stored as a custom annotation on that trigger. This allows the CLI to call the same feed action to stop the event binding when the trigger is deleted.
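A minimal sketch of that deletion path: look up the feed annotation on the trigger, then invoke the same action with the `DELETE` lifecycle event. It assumes the annotation key is `feed` and that the trigger JSON carries `namespace`, `name` and an `annotations` array of `{ key, value }` pairs; both helper names are hypothetical.

```javascript
// Hypothetical helper: find the feed action recorded in a trigger's
// annotations (assumed to use the key "feed"); null if there is none.
function getFeedAction(trigger) {
  const annotations = Array.isArray(trigger.annotations) ? trigger.annotations : [];
  const feed = annotations.find(annotation => annotation.key === 'feed');
  return feed ? feed.value : null;
}

// Build the invocation a client could make when deleting the trigger:
// the same feed action, called with the DELETE lifecycle event.
function deleteFeedInvocation(trigger, authKey) {
  const feedAction = getFeedAction(trigger);
  if (!feedAction) return null; // plain trigger, no feed to tear down
  return {
    action: feedAction,
    params: {
      lifecycleEvent: 'DELETE',
      triggerName: `/${trigger.namespace}/${trigger.name}`,
      authKey,
    },
  };
}
```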

trigger management

Reading the source code for the existing feed providers, I found that nearly all of the code is responsible for handling the lifecycle of trigger management events, rather than integrating with the external event source.

Despite this, all of the existing providers are in separate repositories and don’t share code explicitly, although the same source files have been replicated in different repos.

The CouchDB feed provider is a good example of how feed providers can be implemented.

couchdb feed provider

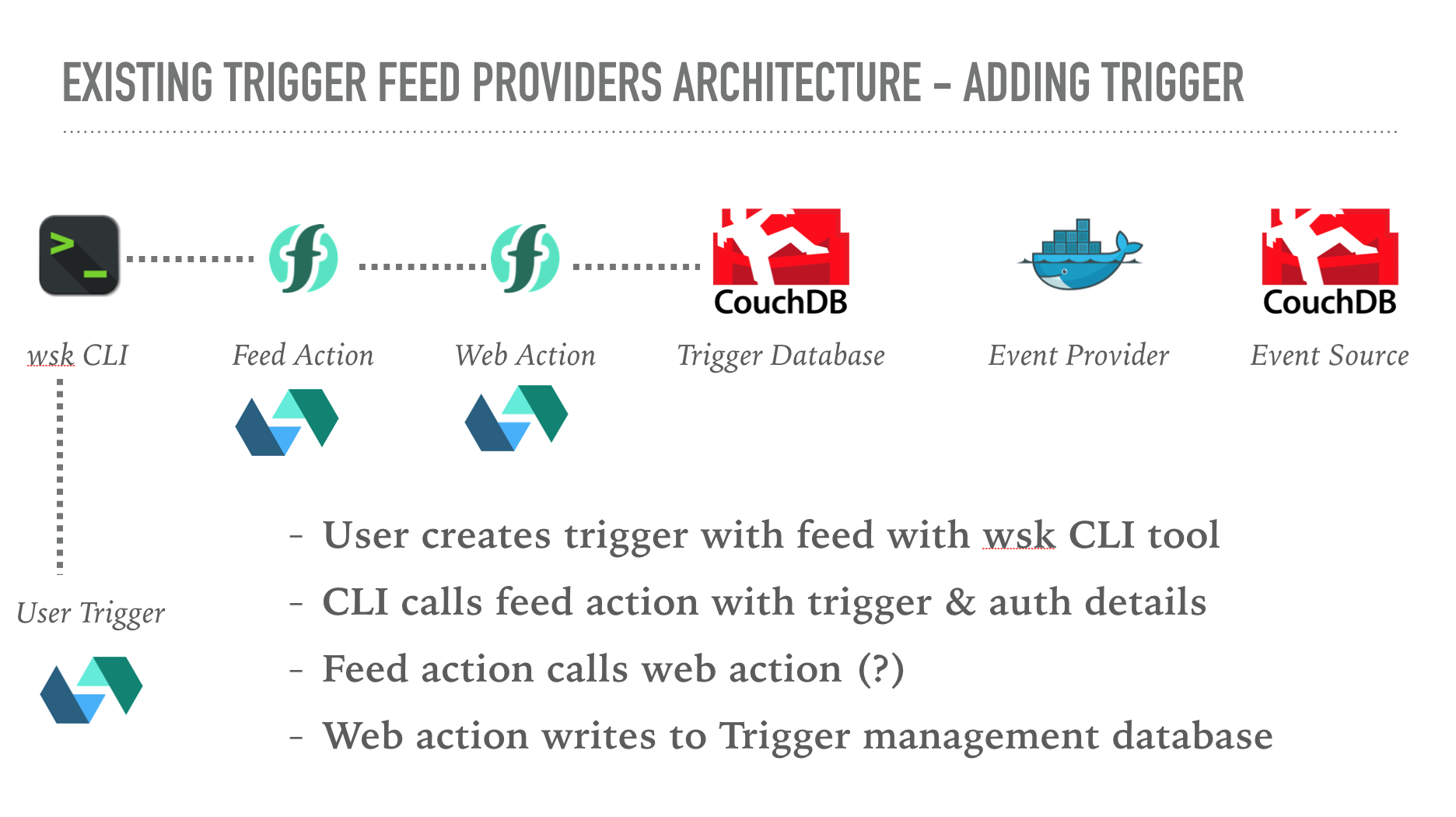

The CouchDB trigger feed provider uses a public action to handle the lifecycle events from the wsk CLI.

This action just proxies the incoming requests to a separate web action. The web action implements the logic to handle the trigger lifecycle event. It uses a CouchDB database to store registered triggers and, based upon the lifecycle event details, updates the database document for that trigger.
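The core of that web action can be pictured as a pure function from (stored document, lifecycle event) to the new document. This is a sketch under stated assumptions: the document layout (`apikey`, `params`, `status.active`) is illustrative and is not the CouchDB provider's actual schema, and the database reads and writes are left to the caller.

```javascript
// Hypothetical sketch: map a lifecycle event onto a trigger registration
// document. Returns the new document, or null when it should be deleted.
function applyLifecycleEvent(doc, event) {
  const { lifecycleEvent, triggerName, authKey, feedParams = {} } = event;
  if (lifecycleEvent !== 'CREATE' && !doc) {
    throw new Error(`trigger ${triggerName} is not registered`);
  }
  switch (lifecycleEvent) {
    case 'CREATE':
      if (doc) throw new Error(`trigger ${triggerName} is already registered`);
      return { _id: triggerName, apikey: authKey, params: feedParams, status: { active: true } };
    case 'UPDATE':
      // Merge new feed parameters over the stored ones.
      return { ...doc, params: { ...doc.params, ...feedParams } };
    case 'PAUSE':
      return { ...doc, status: { active: false, reason: 'paused by user' } };
    case 'UNPAUSE':
      return { ...doc, status: { active: true } };
    case 'DELETE':
      return null; // caller removes the document
    case 'READ':
      return doc; // nothing changes; caller returns the stored config
    default:
      throw new Error(`unsupported lifecycleEvent: ${lifecycleEvent}`);
  }
}
```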

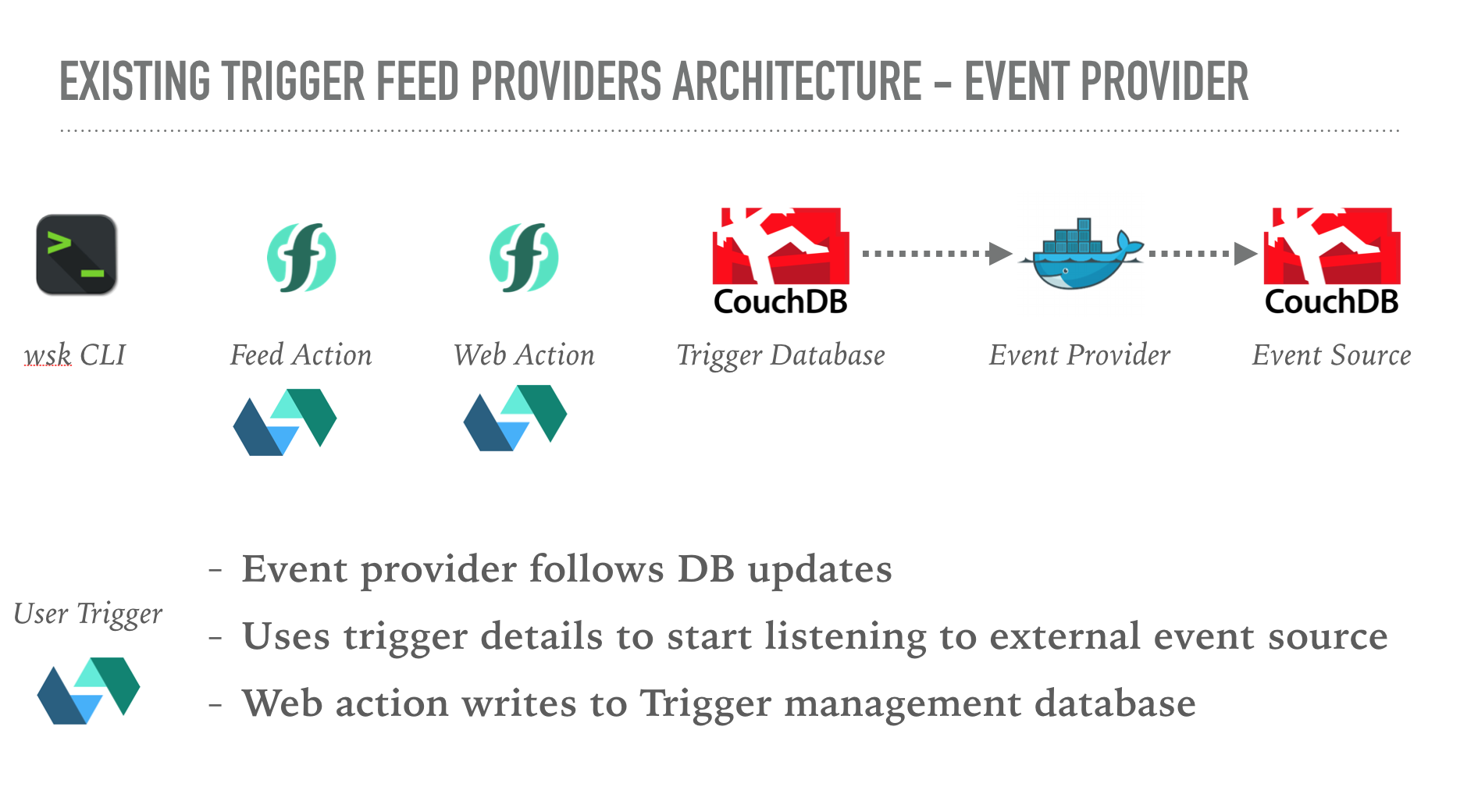

The feed provider also runs a separate Docker container, which handles listening to CouchDB change feeds from user-provided credentials. It uses the changes feed from the trigger management database, modified by the web action, to listen for triggers being added, removed, disabled or re-enabled.

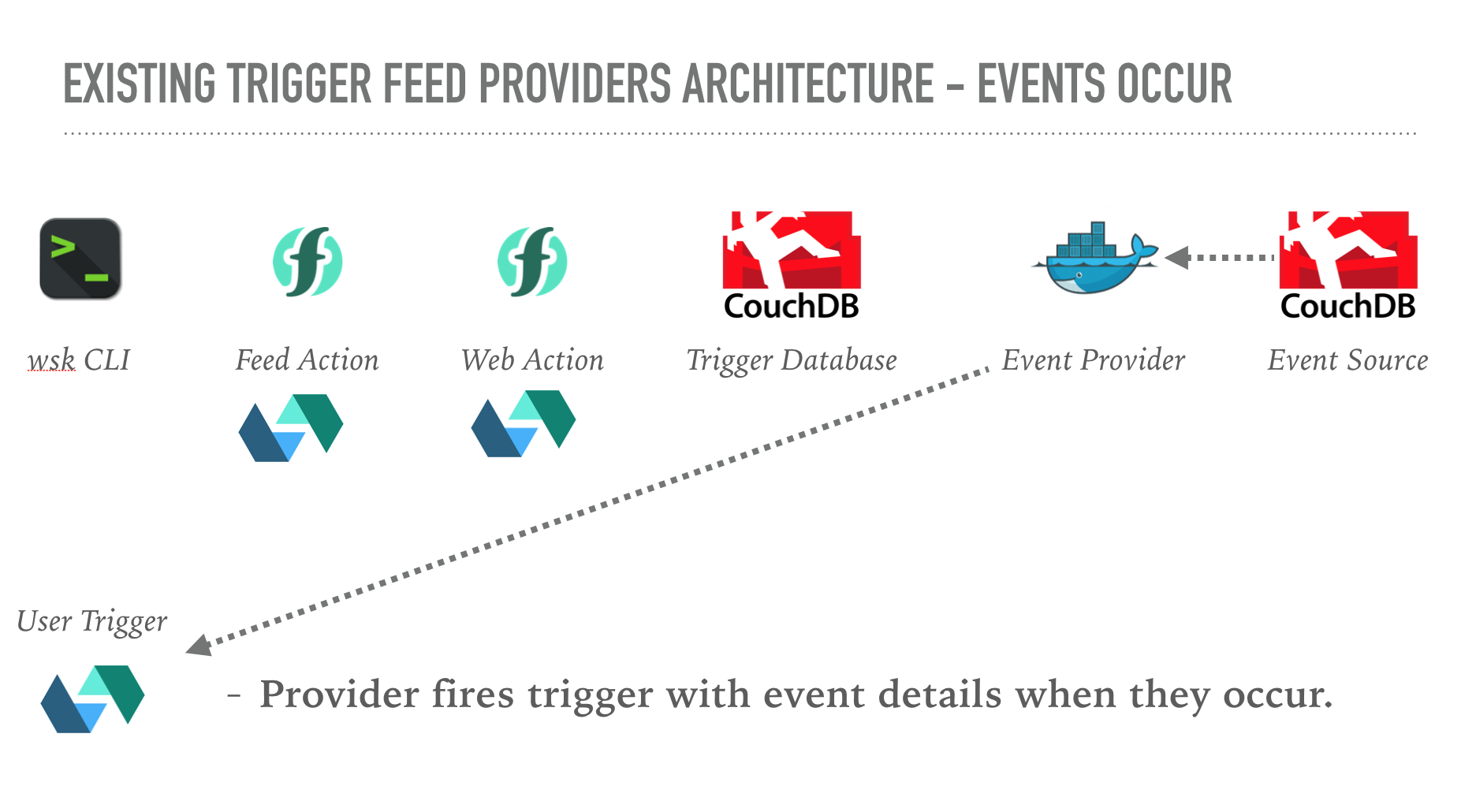

When database change events occur, the container fires triggers on the platform with the event details.
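The container's reaction to the trigger management database can be sketched as a small decision function over each change: start, restart or stop listening for that trigger. It assumes each change carries `id`, an optional `deleted` flag and the document with a `status.active` field; those field names and the function are illustrative, not the provider's actual code.

```javascript
// Hypothetical sketch: decide what to do with a change from the trigger
// management database. `active` maps trigger ids to their current docs.
function handleTriggerChange(change, active) {
  const id = change.id;
  const enabled = !change.deleted && change.doc?.status?.active === true;
  if (enabled) {
    // New trigger: start listening. Existing trigger: the document changed,
    // so restart the listener with the new parameters.
    const action = active.has(id) ? 'restart' : 'start';
    active.set(id, change.doc);
    return action;
  }
  if (active.has(id)) {
    // Trigger deleted or disabled: stop listening to its event source.
    active.delete(id);
    return 'stop';
  }
  return 'none'; // change to a trigger we were not listening to
}
```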

building a new event provider?

Having understood how feed providers work (and how the existing providers were designed), I started to think about the new event source for an S3-compatible object store.

Realising that ~90% of the code was the same across providers, I wondered if there was a different approach to creating new event providers, rather than cloning an existing provider and changing the small amount of code used to interact with the event source.

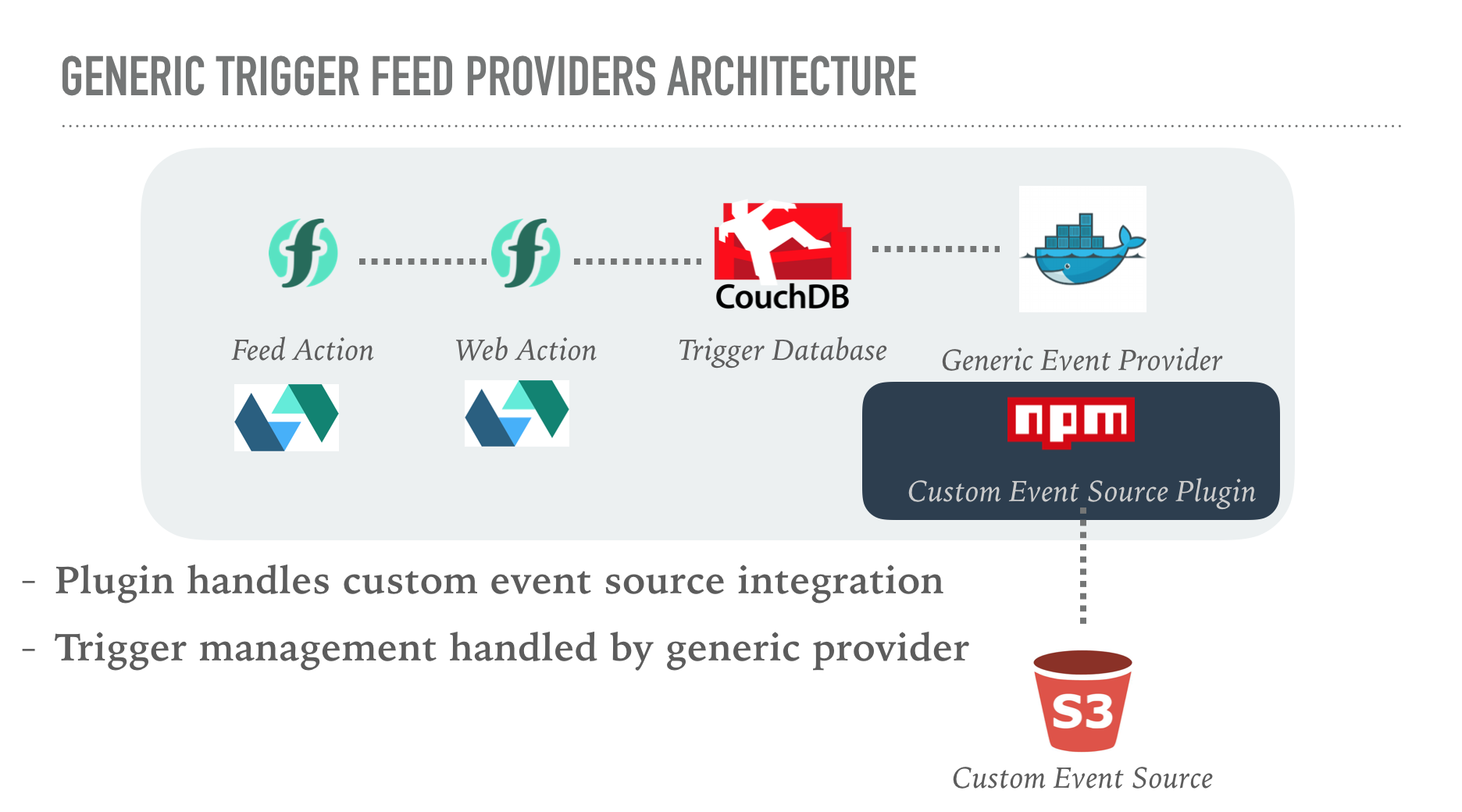

What about building a generic event provider with a pluggable event source?

This generic event provider would handle all the trigger management logic, which isn’t specific to individual event sources. The event source plugin would manage connecting to external event sources and then firing triggers as events occur. Event source plugins would implement a standard interface and be registered dynamically during startup.

advantages

Using this approach would make it much easier to contribute and maintain new event sources.

- Users would be able to create new event sources with a few lines of custom integration code, rather than replicating all the generic trigger lifecycle management code.

- Maintaining a single repo for the generic event provider is easier than having the same code copied and pasted in multiple independent repositories.

I started hacking away at the existing CouchDB event provider to replace the event source integration with a generic plugin interface. Having completed this, I then wrote a new S3-compatible event source using the plugin model. After a couple of weeks I had something working…

generic event provider

The generic event provider is based on the existing CouchDB feed provider source code. The project contains the stateful container code and feed package actions (public & web). It uses the same platform services (CouchDB and Redis) as the existing provider to maintain trigger details.

The event provider plugin is integrated through the EVENT_PROVIDER environment variable. The name should refer to a Node.js module from NPM with the following interface.

// initialise plugin instance (must be a JS constructor)
module.exports = function (trigger_manager, logger) {
  // register new trigger feed
  const add = async (trigger_id, trigger_params) => {}

  // remove existing trigger feed
  const remove = async trigger_id => {}

  return { add, remove }
}

// validate feed parameters
module.exports.validate = async trigger_params => {}
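As a sketch of how the provider side might consume that interface, the loader below resolves the module named by `EVENT_PROVIDER` and checks its shape before creating an instance. The function names are hypothetical, and `requireFn` is only there so the loader can be exercised without installing a real plugin.

```javascript
// Hypothetical loader: resolve the plugin module and check it matches the
// interface (constructor function plus a static validate function).
function loadEventProvider(name = process.env.EVENT_PROVIDER, requireFn = require) {
  if (!name) throw new Error('EVENT_PROVIDER environment variable is not set');
  const Plugin = requireFn(name);
  if (typeof Plugin !== 'function') {
    throw new Error(`${name} must export a constructor function`);
  }
  if (typeof Plugin.validate !== 'function') {
    throw new Error(`${name} must export a static validate function`);
  }
  return Plugin;
}

// Create a plugin instance and confirm it exposes add() and remove().
function createEventProvider(Plugin, triggerManager, logger) {
  const instance = new Plugin(triggerManager, logger);
  for (const method of ['add', 'remove']) {
    if (typeof instance[method] !== 'function') {
      throw new Error(`plugin instance is missing ${method}()`);
    }
  }
  return instance;
}
```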

When a new trigger is added to the trigger feeds’ database, its details will be passed to the add method. The trigger parameters are used to set up listening to the external event source. When external events occur, the trigger_manager can be used to fire triggers automatically.

When users delete triggers with feeds, the trigger will be removed from the database. This will lead to the remove method being called. Plugins should stop listening to messages for this event source.
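To show the shape of a complete plugin, here is a toy event source that fires each registered trigger on a timer, using the `trigger_manager.fireTrigger` function described in the next section. The `interval_ms` feed parameter and the `firedAt` event field are hypothetical; this is an illustration of the interface, not one of the real plugins.

```javascript
// Toy event source plugin: fires each registered trigger on a fixed timer.
function TimerEventProvider(trigger_manager, logger) {
  const timers = new Map();

  // register new trigger feed: start a timer that fires the trigger
  const add = async (trigger_id, trigger_params) => {
    if (timers.has(trigger_id)) await remove(trigger_id);
    const timer = setInterval(() => {
      trigger_manager
        .fireTrigger(trigger_id, { firedAt: new Date().toISOString() })
        .catch(err => logger.error(`failed to fire ${trigger_id}: ${err.message}`));
    }, Number(trigger_params.interval_ms));
    timers.set(trigger_id, timer);
  };

  // remove existing trigger feed: stop the timer so no more events fire
  const remove = async trigger_id => {
    clearInterval(timers.get(trigger_id));
    timers.delete(trigger_id);
  };

  return { add, remove };
}

// validate feed parameters: interval_ms must be a positive number
TimerEventProvider.validate = async trigger_params => {
  const ms = Number(trigger_params.interval_ms);
  if (!Number.isFinite(ms) || ms <= 0) {
    throw new Error('interval_ms must be a positive number');
  }
};

module.exports = TimerEventProvider;
```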

firing trigger events

As events arrive from the external source, the plugin can use the trigger_manager instance, passed in through the constructor, to fire triggers using their identifiers.

The trigger_manager parameter exposes two async functions:

- fireTrigger(id, params): fire the trigger given by id (as passed into the add method) with the event parameters.

- disableTrigger(id, status_code, message): disable the trigger feed due to external event source issues.

Both functions handle the retry logic and error handling for those operations. The event provider plugin should use them to fire triggers when events arrive from external sources and to disable triggers when the external event source has issues.
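The post doesn't spell out how that retry logic works, so the following is only a generic sketch of the technique: exponential backoff that retries errors which look transient (HTTP 429 or 5xx, or no status code at all) and gives up immediately on anything else. The `withRetries` name and the `statusCode` property on errors are assumptions.

```javascript
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Generic retry helper with exponential backoff (hypothetical; not the
// generic provider's actual implementation).
async function withRetries(operation, { retries = 5, baseDelayMs = 100 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await operation();
    } catch (err) {
      const status = err.statusCode;
      const transient = status === undefined || status === 429 || status >= 500;
      // Permanent errors, or too many attempts: surface the error.
      if (!transient || attempt >= retries) throw err;
      // Wait baseDelayMs, then 2x, 4x, ... before the next attempt.
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

A permanent failure surfaced this way is the kind of case where a provider would call disableTrigger rather than keep retrying.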

validating event source parameters

The validate function, a static function on the plugin constructor, is used to check incoming trigger feed parameters for correctness, e.g. checking authentication credentials for an event source. It is passed the trigger parameters from the user.

S3 event feed provider

Using this new generic event provider, I was able to create an event source for an S3-compatible object store. Most importantly, this new event source was implemented using just ~300 lines of JavaScript! This is much smaller than the 7500 lines of code in the generic event provider.

The feed provider polls buckets on an interval using the ListObjects API call. Results are cached in Redis to allow comparison between intervals. Comparing the bucket file names and ETags between intervals allows file change events to be detected.
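The comparison step can be sketched as a diff between two ListObjects snapshots keyed by `Key`, flagging a file as changed when its `ETag` differs. In the real plugin the previous snapshot would come from the Redis cache; here both are plain arrays. The `added` and `modified` status names are assumptions, while `deleted` matches the example event shown in this section.

```javascript
// Hypothetical sketch: diff two ListObjects snapshots ({ Key, ETag, ... }).
function diffBucket(previous, current) {
  const before = new Map(previous.map(file => [file.Key, file]));
  const after = new Map(current.map(file => [file.Key, file]));
  const events = [];
  // New keys are additions; same key with a different ETag is a change.
  for (const [key, file] of after) {
    const old = before.get(key);
    if (!old) events.push({ file, status: 'added' });
    else if (old.ETag !== file.ETag) events.push({ file, status: 'modified' });
  }
  // Keys that disappeared since the last poll are deletions.
  for (const [key, file] of before) {
    if (!after.has(key)) events.push({ file, status: 'deleted' });
  }
  return events;
}
```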

Users can call the feed provider with a bucket name, endpoint, API key and polling interval.

wsk trigger create test-s3-trigger --feed /<PROVIDER_NS>/s3-trigger-feed/changes --param bucket <BUCKET_NAME> --param interval <MINS> --param s3_endpoint <S3_ENDPOINT> --param s3_apikey <COS_KEY>

As bucket files change, trigger events like the following are fired.

{
  "file": {
    "ETag": "\"fb47672a6f7c34339ca9f3ed55c6e3a9\"",
    "Key": "file-86.txt",
    "LastModified": "2018-12-19T08:33:27.388Z",
    "Owner": {
      "DisplayName": "80a2054e-8d16-4a47-a46d-4edf5b516ef6",
      "ID": "80a2054e-8d16-4a47-a46d-4edf5b516ef6"
    },
    "Size": 25,
    "StorageClass": "STANDARD"
  },
  "status": "deleted"
}
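On the consuming side, an action connected to the trigger with a rule (for example `wsk rule create s3-rule test-s3-trigger s3-handler`, where the rule and action names are hypothetical) receives that event as its parameters. A minimal sketch of such an action:

```javascript
// Hypothetical action bound to the S3 trigger: receives the event above
// as its parameters and reports what happened to the file.
function main(params) {
  const { file, status } = params;
  if (!file || !status) {
    return { error: 'expected file and status parameters' };
  }
  const message = `${file.Key} (${file.Size} bytes) was ${status}`;
  console.log(message);
  return { key: file.Key, status, message };
}
```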

Pssst - if you are using IBM Cloud Functions - I actually have this deployed and running so you can try it out. Use the /james.thomas@uk.ibm.com_dev/s3-trigger-feed/changes feed action name. This package is only available in the London region.

next steps

Feedback on the call was overwhelmingly positive about my experiment. Based upon this, I’ve now open-sourced both the generic event provider and S3 event source plugin to allow the community to evaluate the project further.

I’d like to build a few more example event providers to validate the approach further before moving towards contributing this code back upstream.

If you want to try this generic event provider out with your own install of OpenWhisk, please see the documentation in the README for how to get started.

If you want to build new event sources, please see the instructions in the generic feed provider repository and take a look at the S3 plugin for an example to follow.